ChatWithGS7: Enabling the front-end experience.

In this post, we will look at how to turn ChatWithGS7, a Python-based chatbot that uses the HERE Geocoding & Search API, into a React application. We will discuss the benefits of doing so and run a few fun queries with it. We will also hear from Michael Kaisser, the bot's creator, on the nuances of ChatWithGS7.

How did we get here?

In the earlier post, I worked with Anaconda and the Python code developed by Michael. We interacted with the chatbot, and while the results were satisfactory, I decided to take on the challenge of porting the code to React. I will admit that Python is not my first choice of programming language. I gravitated toward JavaScript early in my career, and since then I have become a strong advocate for React.

Now, having decided to rewrite ChatWithGS7 in React, I quickly realized the advantages of doing so, both in terms of user experience and technical flexibility.

Why Rewrite in React?

There are a few key benefits of rewriting ChatWithGS7 for the front end using React:

- User-friendly input: For users who are less comfortable with command-line interfaces, React enables us to create clean, intuitive input fields. These provide a much more approachable way to interact with the chatbot compared to Python's terminal-based input.

- Better error handling: With React, errors can be handled and shown directly in the browser. By wrapping calls to JavaScript's fetch API in try-catch blocks, the app can give users clear feedback when an API call fails, which makes the experience much smoother.

- Dynamic interaction: With React, we can dynamically update the user interface without reloading the page or manually triggering events. This allows users to interact with the app in real-time, creating a more seamless and interactive experience.

Transferring ChatWithGS7 to a React application

When I first saw the Python code powering ChatWithGS7, my initial thought was: "Uff, this is in Python." However, Michael's Python code is well structured and easy to read, so porting it to React would not be a Herculean task.

Here is how I went about transferring ChatWithGS7 to React:

Starting with the imports, Michael uses os (operating system interfaces), requests (for sending HTTP requests to the APIs), OpenAI (for accessing the OpenAI API) and ssl (a TLS/SSL wrapper for socket objects).

Because the requests are sent from the browser rather than from a desktop environment, the os and ssl modules are not needed, and the browser's native fetch() takes the place of requests. In React, the imports become:

```javascript
import OpenAI from "openai";
import { useState } from "react"; // for simple state management
```

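Identifying API Calls: ChatWithGS7 sends requests to two APIs, OpenAI's GPT model (called twice per question) and the HERE Discover API.

I named the corresponding functions callHereDiscoverEndpoint, which calls the Discover endpoint, and handleUserQuery, which runs when the button is clicked: it sends the user's question to OpenAI, passes the generated API call to callHereDiscoverEndpoint and asks OpenAI to turn the result into the final answer.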

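```javascript
// Appends the HERE API key (read from the Vite environment) and calls the Discover endpoint
const callHereDiscoverEndpoint = async (callString) => {
  const url = `${callString}&apiKey=${import.meta.env.VITE_HERE_API_KEY}`;
  try {
    const response = await fetch(url);
    // fetch() only rejects on network failures, so check the HTTP status explicitly
    if (!response.ok) {
      throw new Error(`HERE API responded with status ${response.status}`);
    }
    return await response.json();
  } catch (error) {
    console.error("Error calling HERE API:", error);
    throw error; // let the caller's catch block handle the failure
  }
};
```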

```javascript
// Runs when the user clicks the button.
// userQuery is the input value; setDiscoverQuery, setDiscoverResult and
// setResponseText are state setters created with useState in the component.
const handleUserQuery = async () => {
  try {
    // Step 1: ask GPT to translate the question into a Discover API call
    const completion = await openai.chat.completions.create({
      model: "gpt-3.5-turbo",
      messages: [
        { role: "system", content: system_instructions_1 },
        { role: "user", content: userQuery },
      ],
    });
    const generatedDiscoverQuery = completion.choices[0].message.content;
    setDiscoverQuery(generatedDiscoverQuery);

    // Step 2: call the HERE Discover endpoint and keep only the useful fields
    const result = await callHereDiscoverEndpoint(generatedDiscoverQuery);
    const simplifiedResult = simplifyDiscoverResult(result);
    setDiscoverResult(simplifiedResult);

    // Step 3: cap the JSON at 3,500 characters and ask GPT to phrase the answer
    const simplifiedResultString = JSON.stringify(simplifiedResult).slice(0, 3500);
    const finalCompletion = await openai.chat.completions.create({
      model: "gpt-3.5-turbo",
      messages: [
        { role: "system", content: system_instructions_2.replace("#1#", userQuery) },
        { role: "user", content: simplifiedResultString },
      ],
    });
    const finalResponse = finalCompletion.choices[0].message.content;
    setResponseText(finalResponse);
  } catch (error) {
    console.error("Error processing the query:", error);
  }
};
```
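callHereDiscoverEndpoint attaches the key with plain string concatenation, which assumes that GPT returned nothing but a URL that already has a query string. Because that URL comes from a language model, a slightly more defensive approach is to parse it with the standard URL API and check the host before attaching your key. The sketch below uses a hypothetical buildDiscoverUrl helper; it also shows how the Discover endpoint's limit parameter could be set if you want more than the 20 results returned by default (a limit Michael points out below).

```javascript
// Hypothetical helper: validates the GPT-generated Discover call and attaches the API key.
const DISCOVER_HOST = "discover.search.hereapi.com";

function buildDiscoverUrl(callString, apiKey, limit) {
  // new URL() throws a TypeError if GPT returned something that is not an absolute URL
  const url = new URL(callString.trim());

  // Never send the key to a host other than the Discover endpoint
  if (url.hostname !== DISCOVER_HOST) {
    throw new Error(`Unexpected host in generated call: ${url.hostname}`);
  }

  // set() adds the parameter or overwrites an existing one, and handles encoding
  url.searchParams.set("apiKey", apiKey);
  if (limit !== undefined) {
    url.searchParams.set("limit", String(limit)); // optional: raise the default of 20 results
  }
  return url.toString();
}
```

Inside callHereDiscoverEndpoint, this would replace the concatenation, for example `fetch(buildDiscoverUrl(callString, import.meta.env.VITE_HERE_API_KEY))`.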

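Next, system_instructions_1 and system_instructions_2 are defined. Note how they are passed as the system message in completion and finalCompletion: the first asks GPT to turn the user's question into a Discover API call, and the second asks it to answer the question from the JSON result, with the #1# placeholder replaced by the user's question.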

```javascript
// Instructions for the first GPT call: turn the user's question into a Discover API call.
// A backslash at the end of a line inside a template literal joins it with the next line.
const system_instructions_1 = `Translate the user prompt to a full API call to HERE's discover endpoint \
(https://discover.search.hereapi.com/v1/discover). \
Make sure to include the at and q parameter, but omit the apiKey parameter. \
Remember that the at parameter should contain coordinates. \
Your response should only contain the API call, in one line and nothing else.`;

// Instructions for the second GPT call: answer the question (#1#) from the Discover JSON.
const system_instructions_2 = `Create an informative and helpful English prompt suitable for a TTS that answers the user \
question '#1#' solemnly based on the JSON object that is provided. \
The prompt should be a direct answer to the question. \
(The JSON is a response from https://discover.search.hereapi.com/v1/discover). \
Do not mention JSON or that the information is from a JSON object.`;
```
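system_instructions_2 is filled in with String.prototype.replace(). One subtlety: when the replacement is a string, JavaScript treats sequences such as $& in it as special replacement patterns, so a user question that happens to contain them would be altered. Passing a function as the replacement inserts the text literally. A minimal sketch, with fillPrompt as a hypothetical helper name and a shortened template for illustration:

```javascript
// Hypothetical helper: fills the #1# placeholder with the user's question.
// A replacer function's return value is inserted as-is, so "$" sequences
// in the question are not interpreted as replacement patterns.
function fillPrompt(template, question) {
  return template.replace("#1#", () => question);
}

const template = "Answer the user question '#1#' based on the JSON object.";

console.log(fillPrompt(template, "fish $& chips in Riga"));
// Answer the user question 'fish $& chips in Riga' based on the JSON object.

// With a plain string replacement, "$&" is swapped for the matched text ("#1#"):
console.log(template.replace("#1#", "fish $& chips in Riga"));
// Answer the user question 'fish #1# chips in Riga' based on the JSON object.
```

In handleUserQuery, the second system message would then read `content: fillPrompt(system_instructions_2, userQuery)`.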

I have often heard that switching from Python to JavaScript can be tricky because of JavaScript's asynchronous nature. But trust me: once you get the hang of it, you will love the flexibility and power it offers!
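The good news is that async/await lets the JavaScript version read top to bottom, much like the sequential Python script. The small sketch below uses a hypothetical fakeRequest stand-in that simply resolves after a delay (nothing here calls a real API) to show the two things worth remembering: each await waits for the previous step regardless of timing, and a call without await hands you a Promise rather than the data.

```javascript
// Hypothetical stand-in for a network call: resolves with `value` after `ms` milliseconds.
const fakeRequest = (ms, value) =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));

// Each await pauses this function until the step before it has finished,
// so the order is preserved even though the second step is the fastest.
async function runSteps() {
  const order = [];
  order.push(await fakeRequest(30, "OpenAI: generate the Discover call"));
  order.push(await fakeRequest(10, "HERE: Discover results"));
  order.push(await fakeRequest(20, "OpenAI: final answer"));
  return order;
}

// Without await, you receive a pending Promise, not the resolved value.
function withoutAwait() {
  const result = fakeRequest(10, "data");
  return result instanceof Promise; // true
}
```

Calling `runSteps().then(console.log)` logs the three steps in the order they were written.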

Handling API Responses: Once the HERE Discover API responds, the results are simplified before they are displayed in the app. Keeping only the title and address of each item makes the output more readable for the user and keeps the JSON sent to the second GPT call short:

```javascript
// Keep only the title and address of each Discover result item
const simplifyDiscoverResult = (response) => {
  return response.items.map((item) => {
    const { title, address } = item;
    return { title, address };
  });
};
```
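simplifyDiscoverResult assumes every response has an items array. If the HERE request fails and the body is an error object instead, response.items is undefined and map() throws a TypeError. A more defensive variant could look like the sketch below; simplifyDiscoverResultSafe is a hypothetical name, and keeping only address.label assumes each address object carries a human-readable label string. A shorter address also means more results fit into the 3,500-character limit applied later.

```javascript
// Hypothetical, more defensive variant of simplifyDiscoverResult.
// Assumption: each item's address object has a human-readable `label` string.
const simplifyDiscoverResultSafe = (response) => {
  // Treat a missing or malformed items array as "no results" instead of throwing
  const items = Array.isArray(response?.items) ? response.items : [];
  // Keep only the title and the one-line address label of each place
  return items.map(({ title, address }) => ({ title, address: address?.label }));
};
```

Returning an empty list keeps the app running, but it also hides the failure, so it works best alongside a check on the HTTP status of the Discover request.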

Here is the part of handleUserQuery that simplifies the result, stores it in state for display and passes it on to GPT:

```javascript
const result = await callHereDiscoverEndpoint(generatedDiscoverQuery);
const simplifiedResult = simplifyDiscoverResult(result);
setDiscoverResult(simplifiedResult);

const simplifiedResultString = JSON.stringify(simplifiedResult).slice(0, 3500);

const finalCompletion = await openai.chat.completions.create({
  model: "gpt-3.5-turbo",
  messages: [
    { role: "system", content: system_instructions_2.replace("#1#", userQuery) },
    { role: "user", content: simplifiedResultString },
  ],
});

const finalResponse = finalCompletion.choices[0].message.content;
setResponseText(finalResponse);
```

simplifiedResultString is then cut to at most 3,500 characters before being passed to finalCompletion. The model's reply becomes finalResponse, and setResponseText displays it in the app.
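Slicing the serialized string at a fixed length can cut an item in half, so the second prompt may receive JSON that does not parse. GPT may still make sense of it, but if you prefer to send only complete results, a hypothetical stringifyWithinLimit helper could add whole items until the next one would exceed the limit:

```javascript
// Hypothetical alternative to JSON.stringify(items).slice(0, maxChars):
// keeps whole items only, so the returned string is always valid JSON.
function stringifyWithinLimit(items, maxChars) {
  const kept = [];
  for (const item of items) {
    kept.push(item);
    if (JSON.stringify(kept).length > maxChars) {
      kept.pop(); // this item would exceed the limit, so drop it and stop
      break;
    }
  }
  return JSON.stringify(kept);
}
```

It would be used as `const simplifiedResultString = stringifyWithinLimit(simplifiedResult, 3500);`. Re-serializing after every item is quadratic, which does not matter for the handful of results a Discover query returns; if the very first item is already too long, the helper returns an empty array ("[]").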

Challenges and How I Solved Them

By default, the OpenAI JavaScript library throws an error when it detects a browser environment, because a browser app exposes your secret API key to anyone who uses it. To bypass this for testing purposes, I used the dangerouslyAllowBrowser: true setting. This is not suitable for production: if you want to deploy this application, seriously consider keeping your API keys on a server instead. The same goes for the HERE key, since Vite embeds every VITE_-prefixed variable read through import.meta.env in the JavaScript bundle it builds.

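```javascript
// Browser-side OpenAI client, for local testing only: the key ends up in the bundle
const openai = new OpenAI({
  apiKey: import.meta.env.VITE_OPENAI_API_KEY,
  dangerouslyAllowBrowser: true, // opts out of the library's browser safety check
});
```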
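One common alternative is to keep the keys on a small backend and let the browser talk only to that. The sketch below is a hypothetical Node.js 18+ request handler (createChatHandler, buildOpenAIRequest and the /api/chat route are illustrative names, not part of the original project) that forwards chat messages to OpenAI's Chat Completions REST endpoint and adds the secret key on the server side.

```javascript
// Hypothetical backend sketch for Node.js 18+ (which provides a global fetch).
// The browser POSTs { messages } to /api/chat; the key is only known to the server.
const OPENAI_URL = "https://api.openai.com/v1/chat/completions";

// Builds the fetch options for OpenAI's Chat Completions REST endpoint
function buildOpenAIRequest(messages, apiKey) {
  return {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model: "gpt-3.5-turbo", messages }),
  };
}

// Returns a handler suitable for http.createServer()
function createChatHandler(apiKey) {
  return async (req, res) => {
    if (req.method !== "POST" || req.url !== "/api/chat") {
      res.writeHead(404);
      res.end();
      return;
    }
    try {
      // Collect the request body (an async-iterable stream of Buffers)
      const chunks = [];
      for await (const chunk of req) chunks.push(chunk);
      const { messages } = JSON.parse(Buffer.concat(chunks).toString("utf8"));

      // Forward to OpenAI and relay its status and body unchanged
      const upstream = await fetch(OPENAI_URL, buildOpenAIRequest(messages, apiKey));
      const text = await upstream.text();
      res.writeHead(upstream.status, { "Content-Type": "application/json" });
      res.end(text);
    } catch (error) {
      res.writeHead(500, { "Content-Type": "application/json" });
      res.end(JSON.stringify({ error: String(error) }));
    }
  };
}
```

You could start it with `require("node:http").createServer(createChatHandler(process.env.OPENAI_API_KEY)).listen(3001)` and have handleUserQuery POST `{ messages }` to /api/chat instead of using the OpenAI client in the browser; the relayed body keeps the usual choices[0].message.content shape. The HERE call could be routed through the same server. Anyone who can reach the route can spend your credits, so a real deployment still needs authentication or rate limiting.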

The try-catch blocks around the API calls catch errors, so a failed request does not break the app, and the catch branch is where the user can be given immediate feedback when something goes wrong. This makes the app more user-friendly.

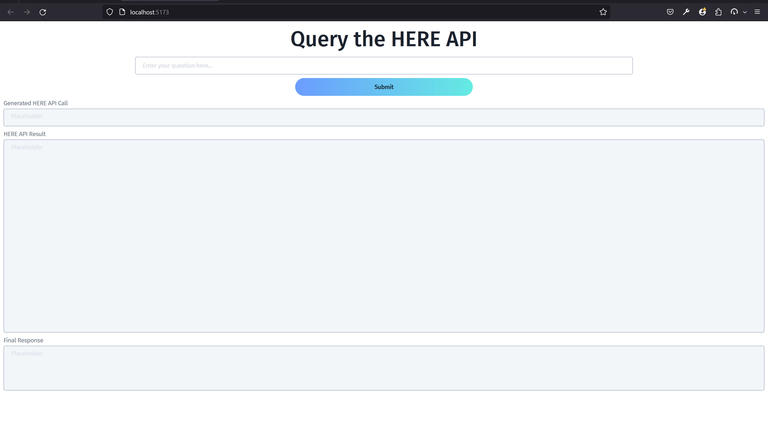

Making the UI Friendly

If you are looking to take this project further, improving the UI can make a significant difference. With libraries like Material UI or DaisyUI, you can create a more polished and responsive design.

In my case, I enhanced the app with our own HERE Design System, and it is amazing how nicely everything came together! If you build the project yourself, replace the text input, button and text areas with your own components to get the app working, and pay attention to how setUserQuery, handleUserQuery, discoverResult and responseText are passed to them.

It may seem like a small step for a developer, but trust me, it is a huge leap for user experience! A friendly, intuitive interface not only makes the app more accessible but also gives users a much smoother experience. Remember typing into the VS Code terminal? Well, now you do not have to!

A well-designed UI turns complex command-line interactions into simple, user-friendly actions, making your app not just functional, but delightful to use.

Running a few tests in the pristine environment

Once I had the React version of the app running, I tested it with various queries to make sure it behaved as expected, for example:

- "Where can I buy ice cream in Riga, Latvia?"

- "How many restaurants are there in Paris?"

- "Where is Berlin?"

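All the answers appear nicely on the screen! See for yourself how the text areas help us understand what happens behind the scenes.

To try the React app yourself, get a HERE API key and an OpenAI API key, provide them as VITE_HERE_API_KEY and VITE_OPENAI_API_KEY (for example in a .env file in the project root), and run the project.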

Notes from the creator

After reading the first post in the series, Michael Kaisser, the creator of ChatWithGS7, wanted to share some insights.

Regarding the query "How many restaurants are there in Paris?", Michael explains:

"GS7 is not designed to return a full list. In fact, with default settings, it will only return 20 results for any given query at most."

For the query "Why is the Taj Mahal famous?", Michael received the following response from the chatbot:

"The Taj Mahal is famous for being a historical monument and a landmark attraction located in Agra, India. It is renowned for its architectural beauty, intricate craftsmanship, and the love story behind its creation. Commissioned by Mughal Emperor Shah Jahan in memory of his beloved wife Mumtaz Mahal, the Taj Mahal is considered one of the most exquisite and iconic symbols of love and devotion in the world."

He adds an important observation:

“This is a query that HERE's discover endpoint cannot answer. And although we explicitly prompted GPT to only use information from the JSON response, its desire to be helpful makes it ignore the prompt and return information from the data it was trained on.”

This happens even though system_instructions_2 instructs the model to answer "solemnly based on the JSON object that is provided."

Final Thought

In short, software engineering is fun! Whether you are running queries in a terminal or building front-end applications, the journey is what makes it exciting. One thing is for sure: information technology has evolved dramatically since I first started learning it 14 years ago. Back in my study days at Lund University, I built a simple machine learning model that could transcribe text from images, and it took me weeks to implement. Today, an AI model like ChatGPT can generate a fully functional front-end application and much more in less than 30 seconds.

As Lionel Messi has said: “The day you think there is no improvement to be made, is a sad one!” With technology advancing this rapidly, there has never been a better time to push your boundaries, learn new things and see how far you can go.

Happy coding!
